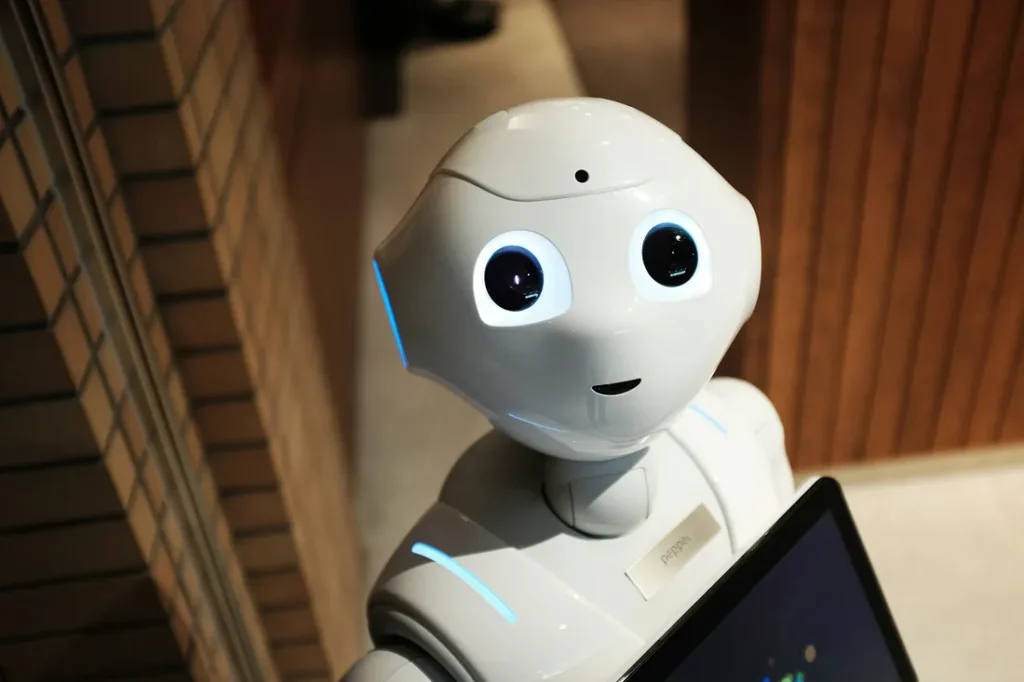

Artificial intelligence is only beginning to emerge in higher ed, but its ability to process large data sets, speed up responses, make predictions, and learn gives it the power to transform colleges and universities. As AI becomes more integrated into campus life, what impact will it have on the way colleges teach, conduct research, and manage their operations?

In this forum, part of a two-part virtual series examining the role of AI in higher ed, a panel of leading thinkers and practitioners in the use of AI will join The Chronicle to discuss how AI and machine learning will shape higher ed's future, including such issues as:

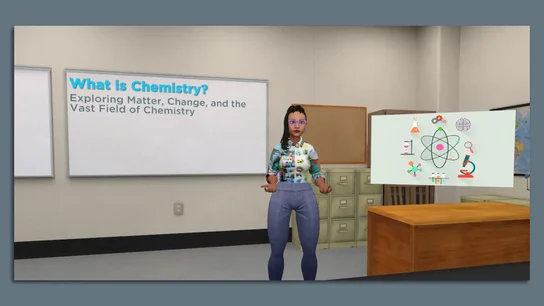

- How will AI benefit instruction, for both in-person and online classrooms?

- How can it help bridge equity gaps in learning?

- How can AI-powered virtual tutors help level the playing field?

With support from the University of Florida

Panelists will include:

- Fred H. Cate, Vice President for Research, Indiana University

- Dan Garcia, Teaching Professor in the Electrical Engineering and Computer Science Department, University of California at Berkeley

- Ashok Goel, Professor of Interactive Computing and Chief Scientist of C21U, Georgia Institute of Technology

- Marsha C. Lovett, Associate Vice Provost for Teaching Innovation and Learning Analytics, Carnegie Mellon University

- Morris Thomas, Director, Center for Excellence in Teaching, Learning, and Assessment, Howard University