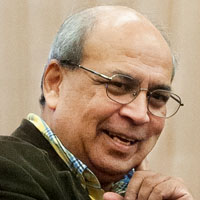

The AAAI Robert S. Engelmore Memorial Lecture Award, sponsored by IAAI and AI Magazine, was established in 2003 to honor Robert Engelmore’s extraordinary service to AAAI, AI Magazine, and the AI applications community, as well as his contributions to applied AI. The award is given to people who have demonstrated excellence in AI scholarship, outstanding applications of AI, and extraordinary service to AAAI and the AI community.

Ashok Goel was honored for pioneering research contributions to biologically inspired design, case-based reasoning, and applications of AI in virtual teaching, as well as for his extensive contributions to AAAI, including service as Editor in Chief of AI Magazine. Read more about Ashok’s award talk at AAAI-26 here.

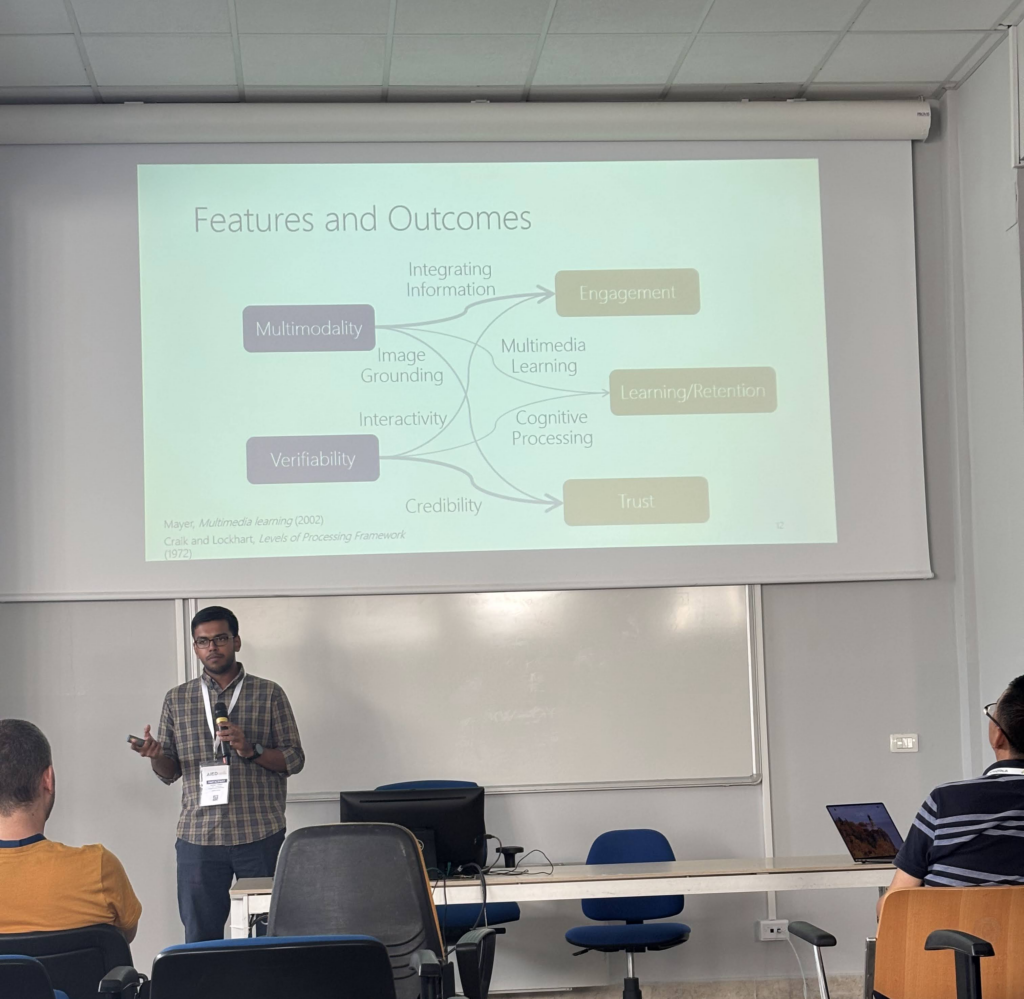

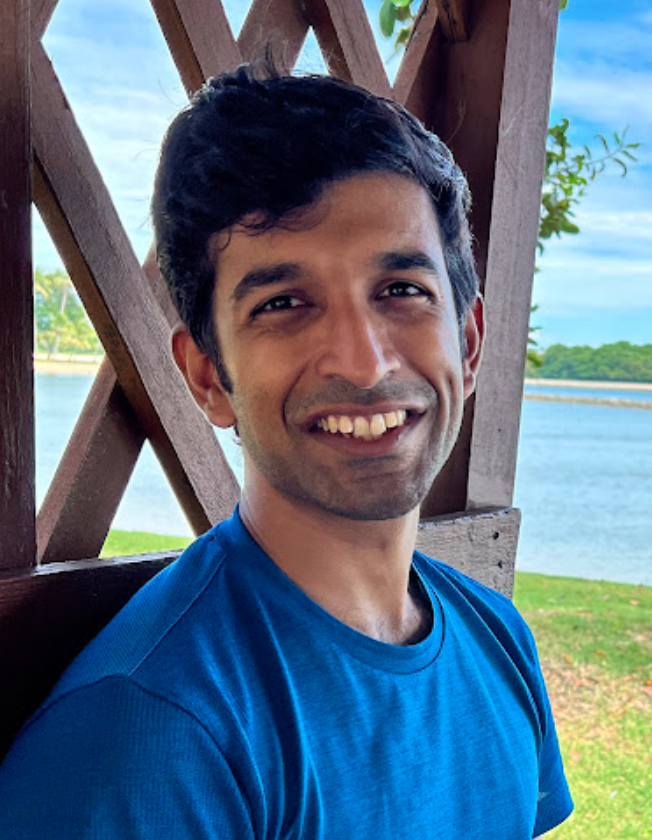

Joining Ashok at the 40th Annual AAAI Conference on Artificial Intelligence in Singapore were current DILab member Erik Goh and former members Jisu Kim and Ida Camacho.